What are the school’s responsibilities regarding assistive technology?

Page 6: Evaluating the Effectiveness of AT

As soon as the AT is implemented, the team should begin gathering information that will enable them to make sound decisions about its effectiveness. It is critical for teachers to monitor and troubleshoot AT use to ensure that the student receives and will continue to receive the greatest possible benefit. Some of the information they should collect includes:

- Student feedback to determine whether the student likes the AT and feels that it is helpful

- Observations to establish whether the student uses the AT and appears engaged and interested in using the device

- Performance data to determine whether the AT helps the student to complete the intended task(s)

Teachers should understand that AT outcomes should be measured using classroom-based content related to the student’s expected performance. For example, if a student is using a calculator, the measurement of progress should be related to the student’s ability to complete the assigned math work, as opposed to simply the student’s ability to use a calculator.

Listen as Marci Kinas Jerome discusses the use of observational data to determine whether AT is effective for a student. Next, listen as Megan Mussano discusses the importance of collecting and analyzing performance data.

Marci Kinas Jerome, PhD

Associate Professor, Special Education and Assistive Technology

George Mason University

(time: 2:13)

Megan Mussano, MS CCC-SLP/L, ATP

Assistive Technology Coordinator, Illinois K-12 School District

Instructor, University of Wisconsin-Milwaukee and Aurora University

(time: 2:05)

Transcript: Marci Kinas Jerome, PhD

It’s really important to collect data and observe students using assistive technology. A lot of times people think, “I’ve prescribed the device. That’s the end of what I need to do.” But it’s really just the beginning. We need to use some different types of data. We can use observational data. We can use quantitative data. It’s really important to interview, to ask questions, because what we find is technology needs can change over time, and technology success can vary by environment. So, for instance, if I’m in a classroom and I’m working with a student, it may appear that the student doesn’t know how to use the device. But in reality there are some other issues going on. Maybe they didn’t know how to do the first part to make the technology work, so therefore they didn’t do anything. They couldn’t see well enough to see on the screen. They didn’t hear if it’s something read aloud. It’s not that they didn’t like the read aloud. They couldn’t hear it. It wasn’t loud enough. The voice wasn’t a good match for them. I need to turn the speed down. I need to turn the speed up. By observation, I will see some of those things. A lot of times it might be the teacher doesn’t know how to implement the technology, effectively incorporate it, or they don’t know how to use it. And so they don’t know how to help and support. So the students are only using it in classrooms where somebody can help them.

On some devices, I can see how many times in a day do they use it? Or how long do they use it for something like a spellchecker or talking word processor? I can observe and say, “Where do they use it? What kind of words? What kind of text did they use it to support them?” So all those observational things are really important because technology can change over time. So if you set everything up and do all the settings in September and you come back in May and the student’s not using it, it might be that you need to change the settings. So some things you can do more simple. I’m going to give you three choices of a word-prediction. But now I see that you can handle nine. So I make it bigger or add more vocabulary or change the voice. I find that you go back to using the device, and sometimes kids do grow out of technology and it’s time to look for something more sophisticated or more advanced.

Transcript: Megan Mussano, MS CCC-SLP/L

There’s no way to truly consider assistive technology unless you truly collect data, and analyze and evaluate that data to determine whether the AT is working for a student. Data is the foundation of just about everything we do in the public schools. As you work through the AT consideration process, the team will come up with an assistive technology tool to trial. You have to collect data to determine if that tool is successful or not. Data can look very different depending what assistive technology you are attempting to trial. It could look different based upon the task that the assistive technology is supporting. It really varies.

A lot of times when we’re working with high-incidence disabilities, one of the issues or one of the tasks that they’re struggling with is reading or writing. When it comes to writing, one of the common tools is a word-prediction program or speech-to-text tool. When we look at the data, determine if it’s effective, effective could mean different things to different students. An assistive tech tool could be effective if it speeds up their writing, if it improves their spelling, if it improves the legibility of writing. Data can vary based upon the student’s needs. Data-collection can vary based upon the task you actually want the student to accomplish or the task the student needs help with. Data-collection is essential, but it can also be a short process. We want to make it practical. Often I have a student complete a three-minute writing prompt, just handwritten, after I train them on the AT tool. I will then give them a similar three-minute prompt and ask them to write again. I’ll do that a couple of times, comparing their handwritten writing to the typing or the AT tool, the word-prediction program. I will look to see what the data shows me. Are they spelling words more correctly? Are they producing more content? You look to see if it helps the student at all.

Collecting and Analyzing Data

To determine whether AT is effective, the AT implementation team needs objective, as opposed to subjective, data. Teachers can evaluate students’ performance by following the steps outlined below.

The type of data the teacher needs to collect will vary, depending on what aspect of the student’s performance they want to measure. To provide the most accurate picture of the changes in student performance they expect to see, the teacher can measure one of the following:

- Speed or rate: The number of times the behavior occurs within a given timeframe (e.g., the number of problems completed correctly in ten minutes)

- Accuracy: The number of problems or percent of the work that a student completes correctly (e.g., the percent of questions answered correctly on a test)

- Frequency: The number of times a behavior occurs within a consistent period of time (e.g., the number of times the student initiates a conversation during recess)

- Duration: The amount of time a student engages in a specific behavior (e.g., time on-task during independent classwork)

- Latency: The time between when a direction is given and when the student complies (e.g., how much time passes between when an in-class activity is assigned and the student begins working on it)
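The measures listed above each reduce to simple arithmetic on what the teacher records. The following is a minimal sketch, not a prescribed tool: the function names and the sample numbers are hypothetical and chosen only to mirror the examples in the list.

```python
def rate_per_minute(count: int, minutes: float) -> float:
    """Speed or rate: how many times something occurs per minute of a timed period."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return count / minutes


def accuracy_percent(correct: int, total: int) -> float:
    """Accuracy: percent of the work completed correctly."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * correct / total


def latency_seconds(direction_given_at: float, student_started_at: float) -> float:
    """Latency: seconds between giving a direction and the student starting."""
    return student_started_at - direction_given_at


# Hypothetical observations, for illustration only.
print(rate_per_minute(count=12, minutes=10))      # problems correct per minute
print(accuracy_percent(correct=18, total=20))     # percent of questions correct
print(latency_seconds(direction_given_at=0.0, student_started_at=45.0))
```

Frequency is a plain count within a consistent observation period, and duration is a total of timed intervals, so neither needs a formula beyond keeping the observation period the same from one session to the next.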

The teacher should first collect baseline data (that is, data on the student’s performance without the use of AT) on the aspect of the student’s performance the AT is meant to support. It is important to collect these data before the AT is implemented so the teacher can compare the student’s performance before and after the AT is used. To collect these data, the teacher must use a data-collection form. These forms will vary depending on what aspect of the student’s performance is being measured.

While implementing the AT, the teacher should collect data on the student’s performance using the same method used to collect the baseline data. Once the AT has been implemented, a good rule of thumb is to collect four to six data points before evaluating its effectiveness.

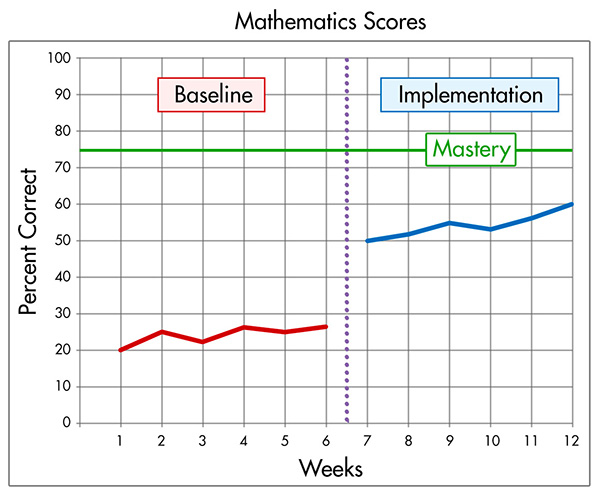

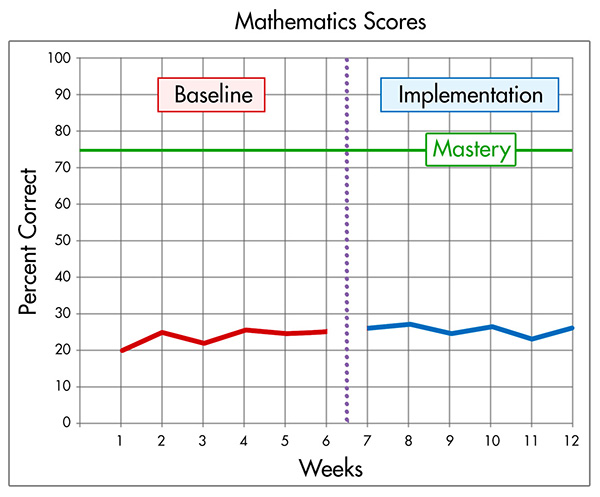

The teacher can compare the student’s performance data with AT to the baseline data to evaluate whether the AT has had the desired effect on that student’s performance. Often, the best way to do this comparison is to graph the data to create a visual representation of how the student has responded to the AT. In the graphs below, note the difference in data patterns for when an AT device or service is and is not effective.

Description: Left Graph

AT device or service is effective: This graph is labeled “Mathematics Scores” and demonstrates the results of the implementation of AT devices or services. The y-axis is labeled “Percent Correct” with segments spanning from zero to 100 in ten-step increments. The x-axis is labeled “Weeks” with segments spanning from one to 12 in one-step increments. The graph is bisected at the six-and-a-half-week mark with a dotted line. The first half of the graph is labeled “Baseline,” the second “Implementation.” A solid green horizontal line indicating 75 percent correct is labeled “Mastery.” A red line in the baseline half of the graph illustrates six weeks of progress: 20, 25, 22, 27, 26, and 27 percent correct, respectively. A blue line in the “Implementation” half of the graph illustrates six weeks of progress following implementation of the AT service or device: 50, 51, 55, 53, 57, and 60 percent correct, respectively.

Description: Right Graph

AT device or service is not effective: This graph is labeled “Mathematics Scores” and demonstrates the results of the implementation of AT devices or services. The y-axis is labeled “Percent Correct” with segments spanning from zero to 100 in ten-step increments. The x-axis is labeled “Weeks” with segments spanning from one to 12 in one-step increments. The graph is bisected at the six-and-a-half-week mark with a dotted line. The first half of the graph is labeled “Baseline,” the second “Implementation.” A solid green horizontal line indicating 75 percent correct is labeled “Mastery.” A red line in the baseline half of the graph illustrates six weeks of progress: 20, 25, 22, 27, 26, and 27 percent correct, respectively. A blue line in the “Implementation” half of the graph illustrates six weeks of progress following implementation of the AT service or device: 27, 28, 25, 26, 23, and 26 percent correct, respectively.
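To put numbers on the visual comparison, the weekly scores from the two graph descriptions above can be summarized as a mean for each phase. This is a minimal sketch: the `summarize` helper is a hypothetical name, and the source does not define a numeric cutoff for "effective," so the code reports the change without judging it.

```python
# Percent-correct scores taken from the two graph descriptions.
baseline = [20, 25, 22, 27, 26, 27]
implementation_effective = [50, 51, 55, 53, 57, 60]
implementation_not_effective = [27, 28, 25, 26, 23, 26]
MASTERY = 75  # the "Mastery" line shown on both graphs


def summarize(before: list[int], after: list[int]) -> dict[str, float]:
    """Return the mean of each phase and the change between them."""
    before_mean = sum(before) / len(before)
    after_mean = sum(after) / len(after)
    return {
        "baseline_mean": before_mean,
        "implementation_mean": after_mean,
        "change": after_mean - before_mean,
    }


for label, scores in [
    ("Left graph", implementation_effective),
    ("Right graph", implementation_not_effective),
]:
    s = summarize(baseline, scores)
    print(
        f"{label}: baseline {s['baseline_mean']:.1f}%, "
        f"implementation {s['implementation_mean']:.1f}%, "
        f"change {s['change']:+.1f} points, "
        f"highest score {max(scores)}% (mastery line at {MASTERY}%)"
    )
```

A mean is only one way to summarize each phase. Graphing the individual data points, as described above, also shows whether the scores are trending up, down, or flat.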

Note: These steps outline the general process for evaluating the effectiveness of a student’s AT. However, it is beneficial for the team to create an evaluation plan that details the specifics of the data collection (e.g., environment[s] in which data will be collected, activities during which data will be collected, the frequency of the data collection, the individuals responsible for collecting and analyzing the data, and the dates on which the data will be reviewed).

Revisiting the Challenge: Brooke’s Data

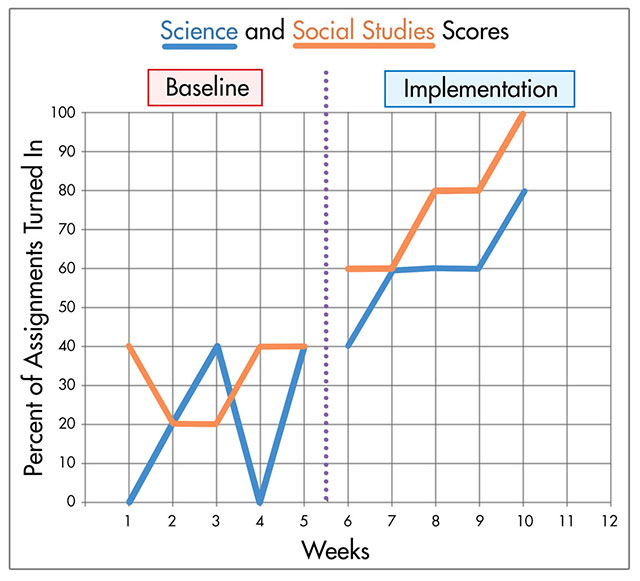

Recall that Brooke has difficulty completing and turning in science and social studies assignments. Follow along as the teachers evaluate the effectiveness of the task management program that Brooke uses to keep track of her science and social studies assignments.

Note: For illustrative purposes, we will demonstrate the process for collecting data on the effectiveness of the task-management program only. In reality, the teachers would also collect data on Brooke’s use of Bookshare.

Step 1 – Determine how to measure the expected outcome. The science and social studies teachers will each record the number of assignments given for the week and the number of assignments Brooke turns in.

Step 2 – Collect baseline data. The teachers gather existing data on Brooke’s assignments from the weeks before she began using her new program. (See the baseline data collection form and graph below.)

Step 3 – Collect implementation data. After Brooke begins using her new AT, the teachers record how many assignments Brooke turns in each week. (See the implementation data collection form and graph below.)

Step 4 – Compare implementation data to the baseline data. To determine whether the AT was beneficial, Mr. Edwards (the special education teacher) gathers the data from both teachers and graphs them. When he compares Brooke’s baseline and implementation data, he concludes that the AT has had a positive effect on Brooke’s performance.

Baseline data

| Date | Science: Number of Assignments Completed | Social Studies: Number of Assignments Completed | Science: Percent of Assignments Completed | Social Studies: Percent of Assignments Completed |
| --- | --- | --- | --- | --- |
| Week 1 | 0/5 | 2/5 | 0% | 40% |
| Week 2 | 1/5 | 1/5 | 20% | 20% |
| Week 3 | 2/5 | 1/5 | 40% | 20% |
| Week 4 | 0/5 | 2/5 | 0% | 40% |
| Week 5 | 2/5 | 2/5 | 40% | 40% |

Implementation data

| Date | Science: Number of Assignments Completed | Social Studies: Number of Assignments Completed | Science: Percent of Assignments Completed | Social Studies: Percent of Assignments Completed |
| --- | --- | --- | --- | --- |
| Week 1 | 2/5 | 3/5 | 40% | 60% |
| Week 2 | 3/5 | 3/5 | 60% | 60% |
| Week 3 | 3/5 | 4/5 | 60% | 80% |
| Week 4 | 3/5 | 4/5 | 60% | 80% |
| Week 5 | 4/5 | 5/5 | 80% | 100% |
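The percentages in Brooke’s two data-collection forms follow directly from the weekly counts. The sketch below recomputes them and adds an overall percentage for each phase. The helper names are hypothetical, and the overall figures are an additional summary rather than something the teachers are described as calculating.

```python
ASSIGNED_PER_WEEK = 5  # each form records counts out of 5 assignments

# Assignments completed per week, copied from the two forms.
baseline = {
    "Science": [0, 1, 2, 0, 2],
    "Social Studies": [2, 1, 1, 2, 2],
}
implementation = {
    "Science": [2, 3, 3, 3, 4],
    "Social Studies": [3, 3, 4, 4, 5],
}


def weekly_percent(completed: list[int], assigned: int = ASSIGNED_PER_WEEK) -> list[float]:
    """Percent of assignments completed in each week."""
    return [100 * n / assigned for n in completed]


def overall_percent(completed: list[int], assigned: int = ASSIGNED_PER_WEEK) -> float:
    """Percent of all assignments completed across the whole phase."""
    return 100 * sum(completed) / (assigned * len(completed))


for subject in baseline:
    before = overall_percent(baseline[subject])
    after = overall_percent(implementation[subject])
    print(f"{subject}: baseline {before:.0f}%, implementation {after:.0f}%")
    print("  weekly baseline:", weekly_percent(baseline[subject]))
    print("  weekly implementation:", weekly_percent(implementation[subject]))
```

The weekly values are the same ones plotted in the graph, so this is also a quick way to check a form for arithmetic slips before graphing it.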

Graph Description

This graph is labeled “Science and Social Studies Scores” and demonstrates the results of the implementation of AT devices or services. The y-axis is labeled “Percent of Assignments Turned In” with segments spanning from zero to 100 in ten-step increments. The x-axis is labeled “Weeks” with segments spanning from one to 12 in one-step increments. The graph is bisected at the five-and-a-half-week mark with a dotted line. The first half of the graph is labeled “Baseline,” the second “Implementation.” A blue line in the baseline half of the graph illustrates five weeks of progress in science: 0, 20, 40, 0, and 40 percent of assignments turned in, respectively. An orange line in the baseline half of the graph illustrates five weeks of progress in social studies: 40, 20, 20, 40, and 40 percent turned in, respectively. A blue line in the “Implementation” half of the graph illustrates five weeks of progress following implementation of the AT service or device in science: 40, 60, 60, 60, and 80 percent of assignments turned in, respectively. An orange line in the “Implementation” half of the graph illustrates five weeks of progress following implementation of the AT service or device in social studies: 60, 60, 80, 80, and 100 percent turned in, respectively.

Activity

Diego, a 4th-grade student diagnosed with ADHD, struggles with staying on-task. He is easily distracted by peer conversations and hallway noises during independent classwork, which occurs for the last 20 minutes of class. His IEP team recommends that his teacher allow Diego to use noise-cancelling headphones during independent classwork to help him remain on-task. The team expects that Diego will be less distracted by noise and his time on-task will increase. To evaluate the effectiveness of the AT, Diego’s teacher collects data on how long Diego engages in on-task behavior during independent instruction both before and after the AT is implemented. Using a stopwatch, she keeps track of how much time he is on-task during the 20 minutes of independent instruction. The data she collected are recorded on the forms below.

Now, it’s time to practice.

- Calculate the average duration of Diego’s behavior before and after his accommodation was implemented and type your answer into the text boxes below.

Baseline data

| Date | Duration |
| --- | --- |
| 10/4 | 7 minutes |
| 10/5 | 11 minutes |
| 10/6 | 12 minutes |
| 10/7 | 8 minutes |
| 10/8 | 12 minutes |
| Average Time | 10 minutes |

Implementation data

| Date | Duration |
| --- | --- |
| 10/11 | 13 minutes |
| 10/12 | 15 minutes |
| 10/13 | 17 minutes |
| 10/14 | 18 minutes |
| 10/15 | 17 minutes |
| Average Time | 16 minutes |

- Plot Diego’s progress on the graph by entering each of the duration data points from both the baseline data and implementation data boxes above. To begin, click the point for the duration on 10/4 (baseline data).

This graph is labeled “Diego’s Graph.” The y-axis is labeled “Duration in minutes,” the x-axis “Date.” The y-axis goes from zero to 20 in two-step increments. A dotted blue line bisects the graph. The first half is labeled “Baseline data,” the second “Implementation data.” The dates in the x-axis in the “Baseline data” side are 10/4, 10/5, 10/6, 10/7, and 10/8. The dates in the “Implementation data” side are 10/11, 10/12, 10/13, 10/14, and 10/15.

- Was the AT effective for Diego?
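The averages in the activity can be checked with a few lines of arithmetic. This is a minimal sketch using the durations recorded on Diego’s forms. The `average` helper and the percent-of-period figure are illustrative additions, not part of the original activity.

```python
PERIOD_MINUTES = 20  # length of the independent classwork period

# On-task durations in minutes, copied from Diego's data-collection forms.
baseline_minutes = [7, 11, 12, 8, 12]            # 10/4 through 10/8
implementation_minutes = [13, 15, 17, 18, 17]    # 10/11 through 10/15


def average(values: list[int]) -> float:
    """Arithmetic mean of the recorded durations."""
    return sum(values) / len(values)


baseline_avg = average(baseline_minutes)
implementation_avg = average(implementation_minutes)

print(f"Baseline average: {baseline_avg} minutes")              # 10.0
print(f"Implementation average: {implementation_avg} minutes")  # 16.0
print(f"Change: {implementation_avg - baseline_avg:+} minutes")
print(
    "Share of the period on-task: "
    f"{100 * baseline_avg / PERIOD_MINUTES:.0f}% before, "
    f"{100 * implementation_avg / PERIOD_MINUTES:.0f}% after"
)
```

Expressing the averages as a share of the 20-minute period makes the two phases easier to compare if the length of independent classwork ever changes.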

Making Data-Based Decisions

By evaluating the student’s data, the AT implementation team plans its next steps. If the AT seems to be benefiting the student, the team can decide whether to purchase the device or continue the service. If, on the other hand, the AT is not benefiting the student, the team should determine the reason for this and decide whether to make adjustments to the AT or use a different type of AT. To help with this task, the team can use guiding questions, like those that follow.

- Did the student use the AT consistently?

- Did everyone who needed training receive it?

- Did the AT allow the student to feel like a member of the class?

- Did the student enjoy using the AT?

- Was the student involved in the AT process (e.g., identifying or selecting the device)?

- Is the AT supported in the environment?

- Is the device too difficult to manage or use?

- Do teachers think the AT takes too much of their time?