Fidelity of Implementation: Selecting and Implementing Evidence-Based Practices and Programs (Archived)

Wrap Up

Once school personnel have selected an evidence-based practice or program, they need to have a plan in place to make sure it is implemented with fidelity. Failure to implement with fidelity could jeopardize the effectiveness of the practice or program. There are a number of actions school personnel can take to increase the likelihood that they will implement the new practice or program with high fidelity. These actions include:

- Establishing an implementation team

- Training staff on how to implement the practice or program

- Providing ongoing training and support for the implementation of the practice or program

- Using existing manual(s) or creating clear guidelines for the implementation process

- Monitoring and evaluating implementation fidelity

Cynthia Alexander highlights some important factors that affect fidelity of implementation (time: 1:35).

Transcript: Cynthia Alexander

Principals are aware of the challenges with implementation fidelity. One of the problems is there’s such a high turnover in programs in schools. We really don’t give them, for the most part, time to figure out if they work well or not, because in education we have a tendency to always go for the greatest and the latest. So, in some cases, fidelity is not even an option. It’s all in according to how long you stick with something. For those things that do have what I call “stickability,” it’s important to have a plan, and you can’t just say this is what we’re doing, this is the direction we’re going in. You have to have a plan on how you’re going to choose a program, implement the program, evaluate the program. The supports that will be offered and have specific benchmarks and checkpoints to make sure that the expectations are clearly established and followed through on. Fidelity of implementation also requires monitoring. A lot of times in schools, they’re not successful because the monitoring piece is out of place, and then you have to explain to the teachers or your staff that you’re not monitoring to check on them, or you’re not monitoring them to catch them doing bad. You’re actually monitoring to make sure that the program is giving them an opportunity for a greater level of success. Sometimes with fidelity of implementation, the challenge is that there is not an understanding at the classroom level. So we have to make sure that there’s ongoing training to make sure that the teachers have opportunities to clarify their understanding of what the expectations are, what the actual end results will be, and then what the product from that individual class or individual student will be.

The sections below give the conclusion of each school’s first year of implementing a new program and show how fidelity influenced student outcomes.

Conclusion after First Year of Implementation

Paige Elementary School

When they first began monitoring the progress of their students, a number of teachers at Paige Elementary School struggled with the new practice. Some had difficulty administering the probes, others experienced problems entering data into the computer program, and a few found the organizational component to be overwhelming. On the other hand, the teachers reported that using the weekly data allowed them to respond more effectively to their students’ needs. The implementation team at Paige Elementary provided ongoing support through coaching, and the district provided training sessions and booster sessions throughout the year. Fidelity data indicated that:

- By the end of the first semester, the majority of teachers were implementing progress monitoring with 85 percent fidelity or greater

- By the end of the second semester, all of the teachers were implementing with 85 percent fidelity or greater

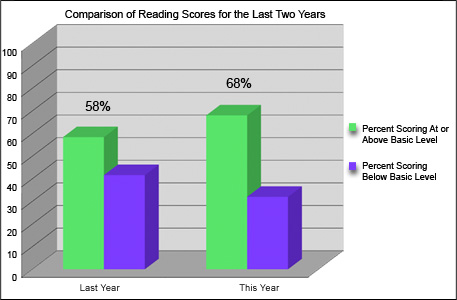

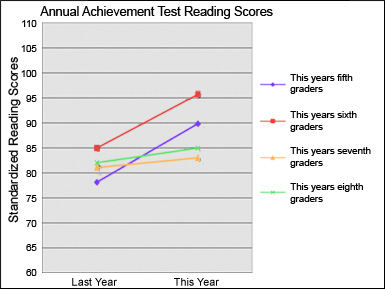

During this first year of implementation, school personnel did not meet their goal that 75 percent of their students would score at or above the basic level on the annual standardized reading test. However, they did see substantial gains in the students’ reading scores across all grades: 68 percent of their students attained scores at or above the basic level on the annual standardized reading test. All in all, the teachers at Paige Elementary believe that progress monitoring has been successful, and they are hopeful that they will reach, and perhaps exceed, their goal next year.

Listen as Lynn Fuchs reinforces the importance of implementing a practice or program as it was designed (time: 1:11).

Lynn Fuchs, PhD

National Center on Response to Intervention

Transcript: Lynn Fuchs, PhD

Fidelity of implementation is important because once you’ve selected a high-quality practice, whether it’s an assessment practice or an instructional practice, it produces accurate reliable assessment information or it results in the kinds of good student outcomes you’re hoping to promote. When a school does not use procedures in the same way that the research studies have used the procedures then it’s unlikely that the school will get the same kinds of outcomes that are documented in the studies. So a school has to follow the directions that the vendors of the assessment or instructional practices tell them represent the way that the tool is designed to be used. And the tool has to consistently be used in those ways in order for the user to expect the tool to produce high-quality information or high-quality outcomes for children.

Conclusion after First Year of Implementation

Grafton Middle School

When Grafton Middle School purchased a reading program several years ago, school personnel were disappointed that student reading outcomes did not improve. Because the program seemed self-explanatory, they initially decided not to provide training specific to its implementation. Even though the teachers at Grafton Middle School had not experienced great success with the program over the past few years, the implementation team’s recent research confirmed that the reading program was evidence-based and had been shown to be effective with groups of students similar to those at Grafton. After talking to a number of teachers, the team concluded that the initial implementation of the program most likely lacked fidelity. The team decided that program-specific professional development and ongoing support would help the teachers implement the program as it was intended. As it turns out, the training and support have made a tremendous difference. Now that the teachers are implementing the program with fidelity, the reading scores of the students have increased significantly.

Joseph Torgesen emphasizes the need for training, ongoing support, and monitoring to promote fidelity of implementation (time: 1:16).

Joseph Torgesen, PhD

Florida Center for Reading Research

Transcript: Joseph Torgesen, PhD

Principals and other leaders need to do whatever’s necessary to train, motivate, encourage teachers to implement high-quality programs with fidelity. And training is something that cannot be overlooked. A good reading program often requires new kinds of language on the part of teachers, new ways of explaining things. Certainly, new ways of modeling behaviors, new ways of monitoring student performance. And they’re all woven together in a complex set of behaviors, and it doesn’t make any sense to think that teachers could just pick this up by looking at the teacher’s manual. They really do need models. Just as kids need models for changing their behavior, teachers need models for changing their behavior. If you’ve done the work to select a strong program, it just seems downright silly not to go the next step and train and assist so that the major elements of the program can be followed by all the teachers in the school. Principals just need to recognize that selecting the program is not the only thing they need to do. There really is a very significant training, support, and monitoring effort that needs to follow in order to ensure that all teachers can profit and do profit from using the materials they’ve been given.

Conclusion after First Year of Implementation

DuBois High School

DuBois High School is still in the initial stages of implementing school-wide PBIS. During the first year, school personnel established a PBIS team, created an action plan, and obtained “buy in” from over 80 percent of the school staff. Also during this first year, ten volunteers from the DuBois staff attended PBIS training. When they returned, they began working with the remaining school staff to establish school-wide behavioral expectations, create classroom rules, employ consistent behavior management strategies, and determine a method of rewarding appropriate behavior. This initial preparation and the structure embedded in the PBIS framework set the stage for strong implementation. Further monitoring by the implementation team and the district has helped to address problem areas and to promote high fidelity.

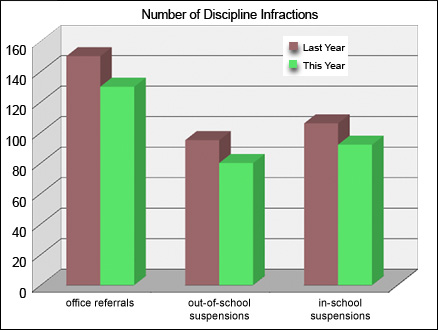

Whereas in the past DuBois had rising numbers of office referrals and suspensions, the school is now seeing a drop in these infractions due to better behavior management in classroom and non-classroom areas. Additionally, students report liking the new method for rewarding appropriate behavior and, consequently, a more positive school climate seems to be developing. Changes have been slow but steady, and school personnel are optimistic that positive changes will continue. Due to the complexity of PBIS, school staff anticipate that it will take three to five years to fully implement PBIS at DuBois.

George Sugai discusses the relation between fidelity, improved student outcomes, and long-term planning (time: 1:47).

George Sugai, PhD

Center on Positive Behavioral Interventions & Supports

Transcript: George Sugai, PhD

Fidelity of implementation is really important for a number of reasons. Knowing how and how well one is implementing an intervention or practice is important because we need to know whether or not we are actually affecting student outcomes. We really want to know if we’re maximally improving the student outcomes, as well. We’re also very interested in knowing whether or not the results as promised by the practice have been realized by us who are implementing. And if we have to make enhancements to the implementation, we really know that it’s still having an effect on those student outcomes. One thing that we’ve learned from the implementation literature is that continuous improvement is an important part of fidelity of implementation. You want to become more efficient with the intervention and still maintain the same level of outcomes, because it’s inevitable that some other intervention or initiative is going to come into the school and is going to compete for time and resources. So we’ve got to figure out how we can continue to maintain the same level of kid outcomes by increasing the efficiency by which the practice is implemented.

Implementation occurs in phases. There’s this discovery phase about what’s important, then there’s this try-it-out phase and see if it could work and then there’s this let’s go for it and put it in place and then there’s this ultimate level which is implementing with integrity and with sustainability. Each one of those phases requires that the system put in place the structures to support it. So it does become institutionalized. We’ve learned that it takes about 18–24 months for a school to adopt it with integrity, but it actually takes 36–48 months for that practice to become habit so that it is resistant to wavering and so forth.

Revisiting Initial Thoughts

Think back to your responses to the Initial Thoughts questions at the beginning of this module. After working through the Perspectives & Resources, do you still agree with those responses? If not, what aspects about them would you change?

How can school personnel identify and select evidence-based practices or programs?

How can school personnel effectively implement evidence-based practices or programs?

How can school personnel determine that they have effectively implemented evidence-based practices or programs?

When you are ready, proceed to the Assessment section.